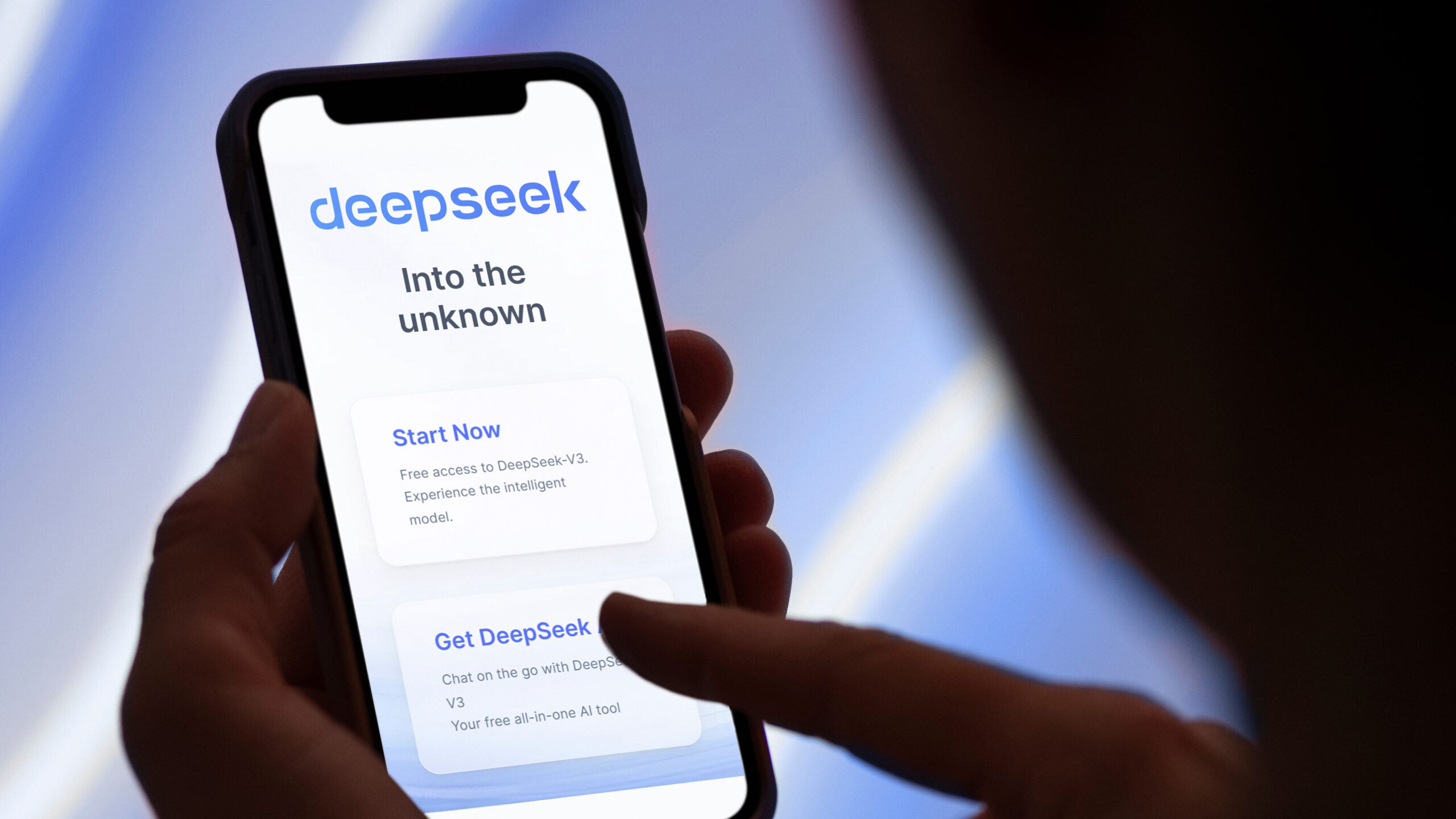

DeepSeek ‘incredibly vulnerable’ to attacks, research claims

DeepSeek's newly released AI model, R1, has raised concerns after performing poorly in safety testing conducted by Cisco. R1 recorded a 100% attack success rate, meaning it failed to block a single harmful prompt in the test set. This points to a lack of effective safety guardrails. Its peers, such as Gemini 1.5 Pro and Llama 3.1 405B, performed better, which highlights weaknesses in DeepSeek's design. Separately, a security lapse at DeepSeek exposed critical databases containing over a million records. Together, these findings underscore the need for more rigorous security evaluation in AI development.

In light of these findings, users of AI chatbots should exercise caution. Models such as ChatGPT and DeepSeek raise privacy concerns because of their potential data collection practices. Users should verify that a chatbot is legitimate, avoid sharing personal information, and maintain good security habits, such as using strong passwords and keeping software up to date. They should also watch for suspicious activity that could compromise personal data or lead to a security breach.
