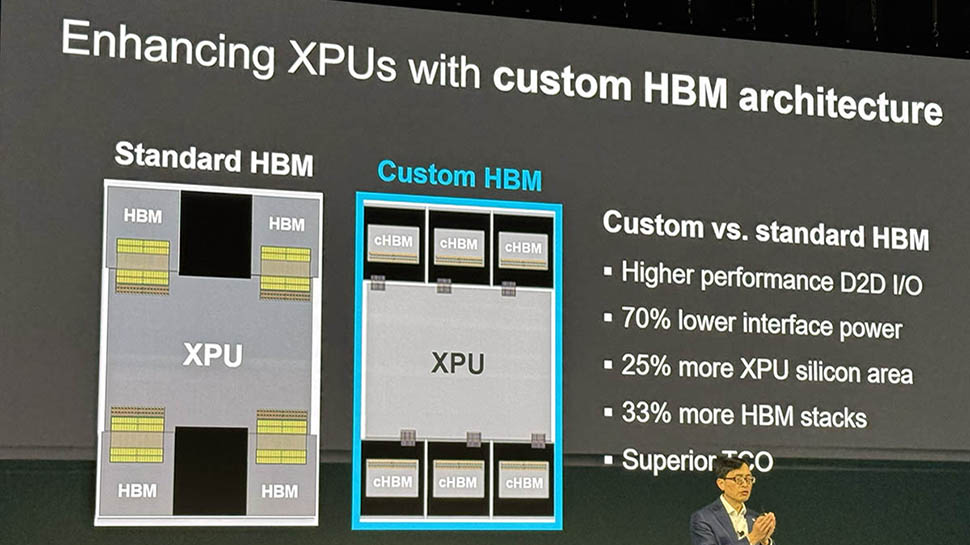

A $100bn tech company you’ve probably never heard of is teaming up with the world’s biggest memory manufacturers to produce supercharged HBM

Marvell Technology has introduced a custom HBM compute architecture, developed in collaboration with memory makers Micron, Samsung, and SK Hynix, that aims to boost the efficiency and performance of XPUs (AI accelerators) in cloud infrastructure. By tightening memory integration and optimizing the interfaces between AI compute silicon dies and High Bandwidth Memory (HBM) stacks, the technology promises significant reductions in power consumption and in the silicon area the interface requires, allowing for increased memory density and scalability.

The approach departs from the standard JEDEC-defined HBM interface, offering hyperscalers memory solutions tailored to their own accelerators and a way to manage energy demands more effectively. The customized HBM stack is pitched as both improving performance and lowering costs for advanced AI and cloud computing systems. It reflects a growing industry trend toward customized memory as a route to efficient, scalable hardware for the AI era.

Collaborations of this kind between leading technology companies signal a move toward more bespoke hardware, giving cloud operators the tools they need to meet the demands of an increasingly AI-driven landscape. For hyperscale data centers, the development marks a notable step in how AI accelerators are designed and delivered, with the focus on performance, power efficiency, and cost-effectiveness.